ALTogether

Creating richer, more reliable alt text

Presented at the 2021 Design Thinking for User Experience Design Expo at Stanford University, where it received awards for Most Societal Impact, Best Demo, Best Pitch, and Best Concept Video.

Overview

Images play a central role on social media platforms, yet many sighted users have never heard of alt text, so countless photos are posted without descriptions. ALTogether is an Instagram extension that helps sighted users write quality alt text by editing AI-generated suggestions for their images.

Developed with Haeli Baek, Jung-Won Ha, and Sydney Jones.

What's alt text?

To interact with images on webpages and other digital platforms, many people who are blind or visually impaired use a screen reader to access written descriptions of those images. This description is called alt text. Adding it to an image makes the visual information the image conveys accessible to people who can't see it.

Problem

After interviewing six blind and visually impaired users, we learned that most images posted online don't include alt text. Sighted users often upload photos without ever thinking to write it. One 2019 study found that a mere 0.1% of all images on Twitter included alt text, leaving the other 99.9% virtually inaccessible to the many blind and visually impaired users who rely on screen readers to navigate the digital landscape (How User-Provided Image Descriptions Have Failed to Make Twitter Accessible).

It's not difficult to see why: social media platforms often have a convoluted, hidden process for adding personalized alt text. The option can be buried 3 or 4 extra clicks deep in advanced settings, so sighted users don't know how to add alt text even when they want to be allies.

Some platforms try to work around this issue by providing auto-generated alt text, which relies on artificial intelligence to analyze and describe the image programmatically. However, these descriptions are often inaccurate and lack the context and nuance that only a human can provide, leaving blind and visually impaired users frustrated.

"One of my friends shared a photo on Facebook, and the auto-generated alt text read, 'may contain sky, grass, bird,' which sounds really serene and idyllic! But the caption clarified the image was actually of a creepy, dead crow... The alt text didn't really capture the point of the image."

— One blind user whom we interviewed

Accessibility shouldn’t just be an afterthought that’s remedied with automation. We wondered, how could we make writing alt text easier and more fun from the start?

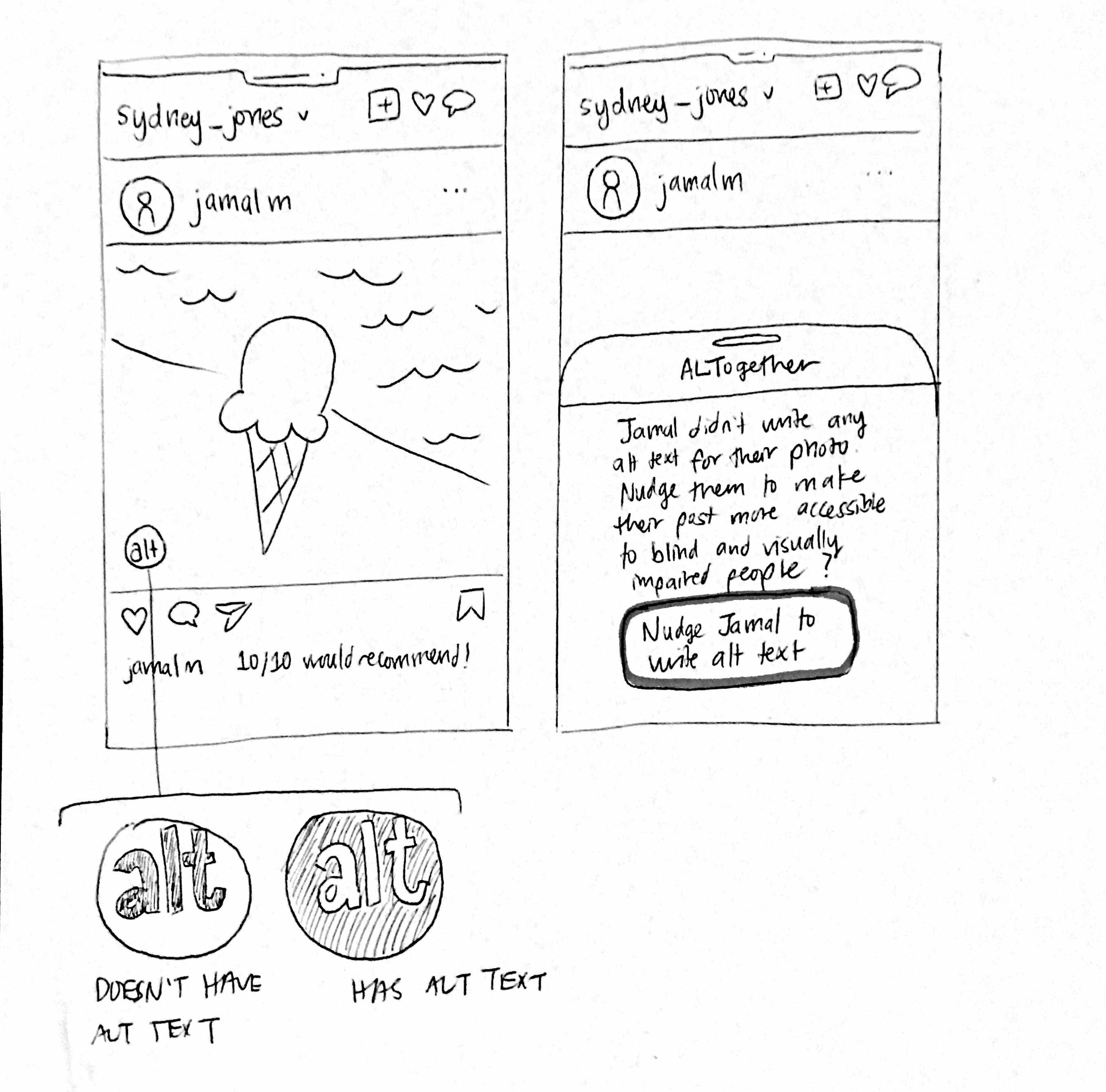

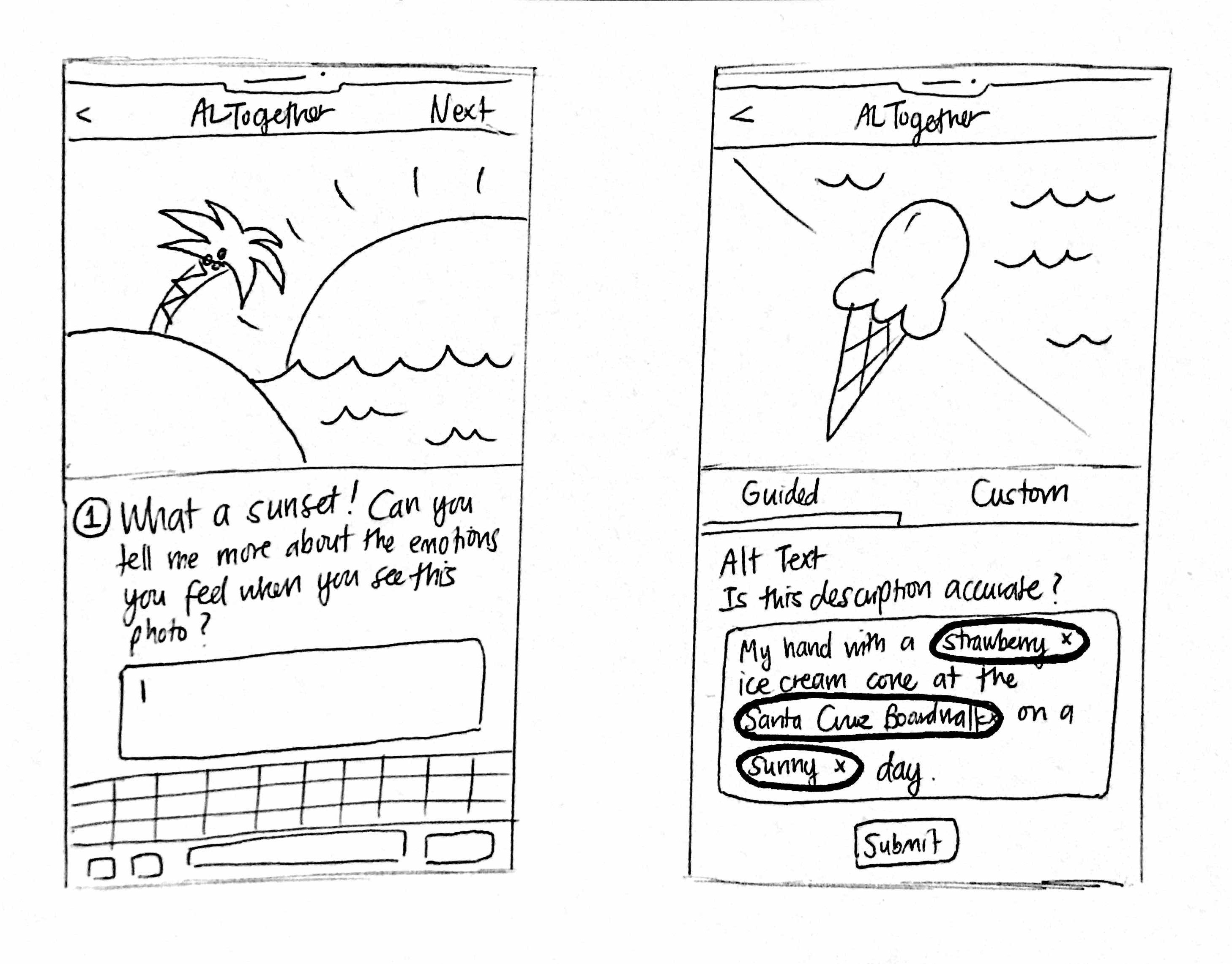

Prototyping

Because the issue lies not with the disability but with the lack of accessibility, we decided to focus our efforts on educating sighted users and shifting the burden away from blind and visually impaired users. We chose Instagram as our target platform because it is the most dependent on visual content.

We conducted 3 rounds of prototyping at low, medium, and high levels of fidelity, testing them with different sighted users who had varying degrees of familiarity with posting on the platform. From this testing, we validated our assumption that writing alt text isn’t primarily a question of time or effort but rather of awareness. Once users understood what alt text was, they liked the idea of writing it to make their feeds more accessible.

We also found that because most sighted users didn't know what alt text was, they didn't know which best practices to follow when writing it. This revealed a tension between blind and visually impaired users' need for high-quality, accurate alt text and sighted users' inexperience in writing it. Some of the alt text they wrote was underdescriptive, while some ran paragraphs too long.

Sighted users didn't know which details were the most important to include when capturing the image.

Solution

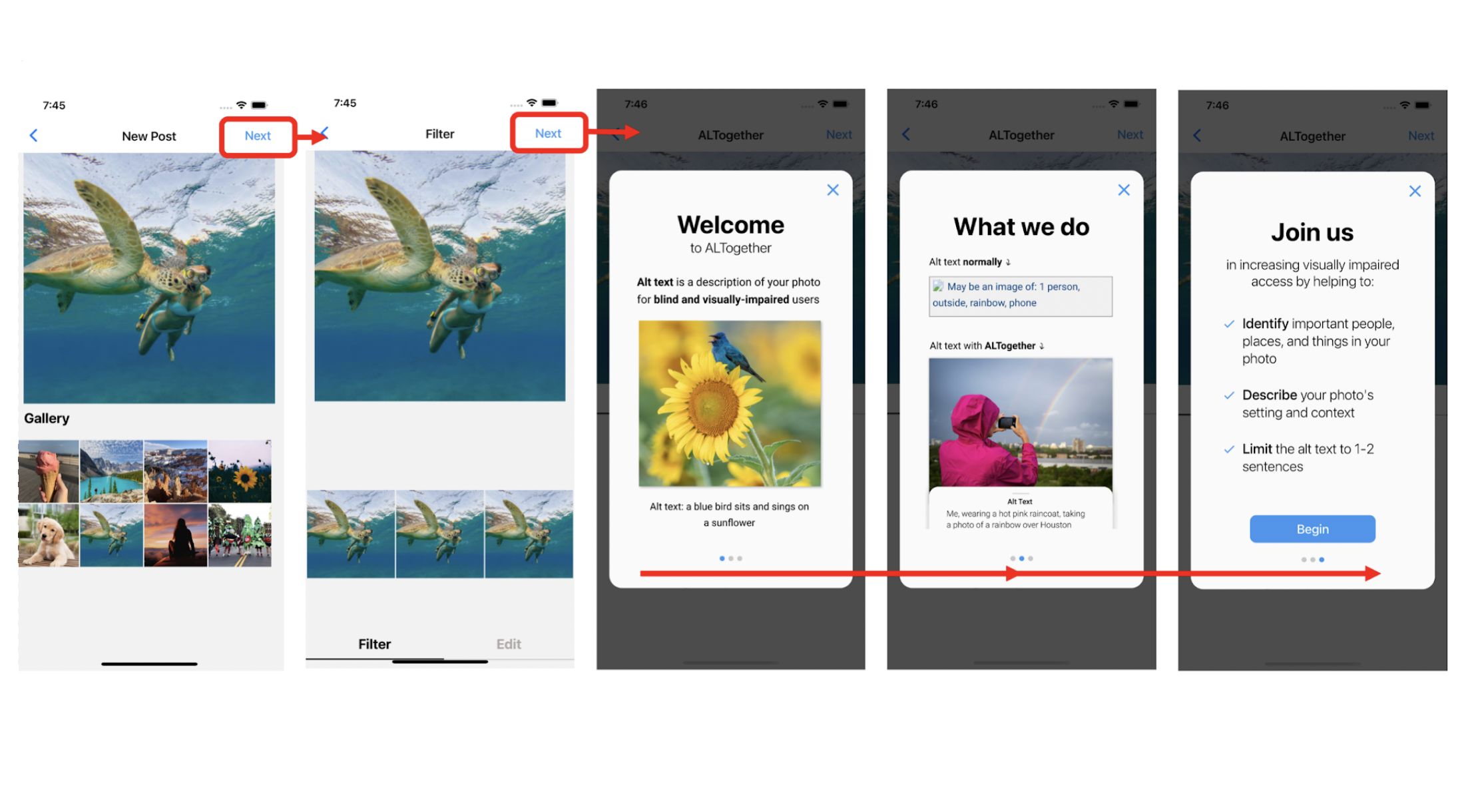

We designed ALTogether, an Instagram extension that brings alt text to the forefront.

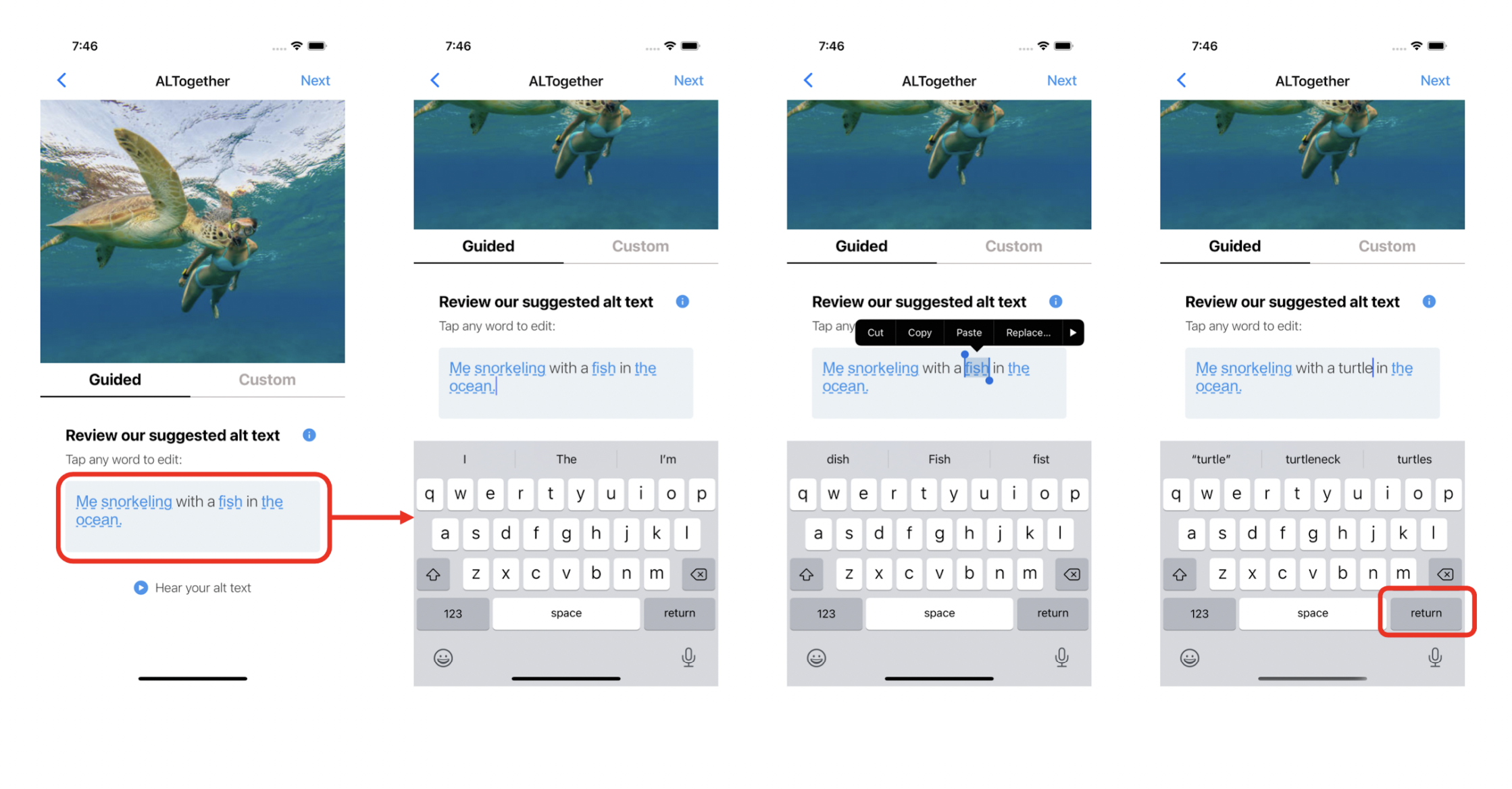

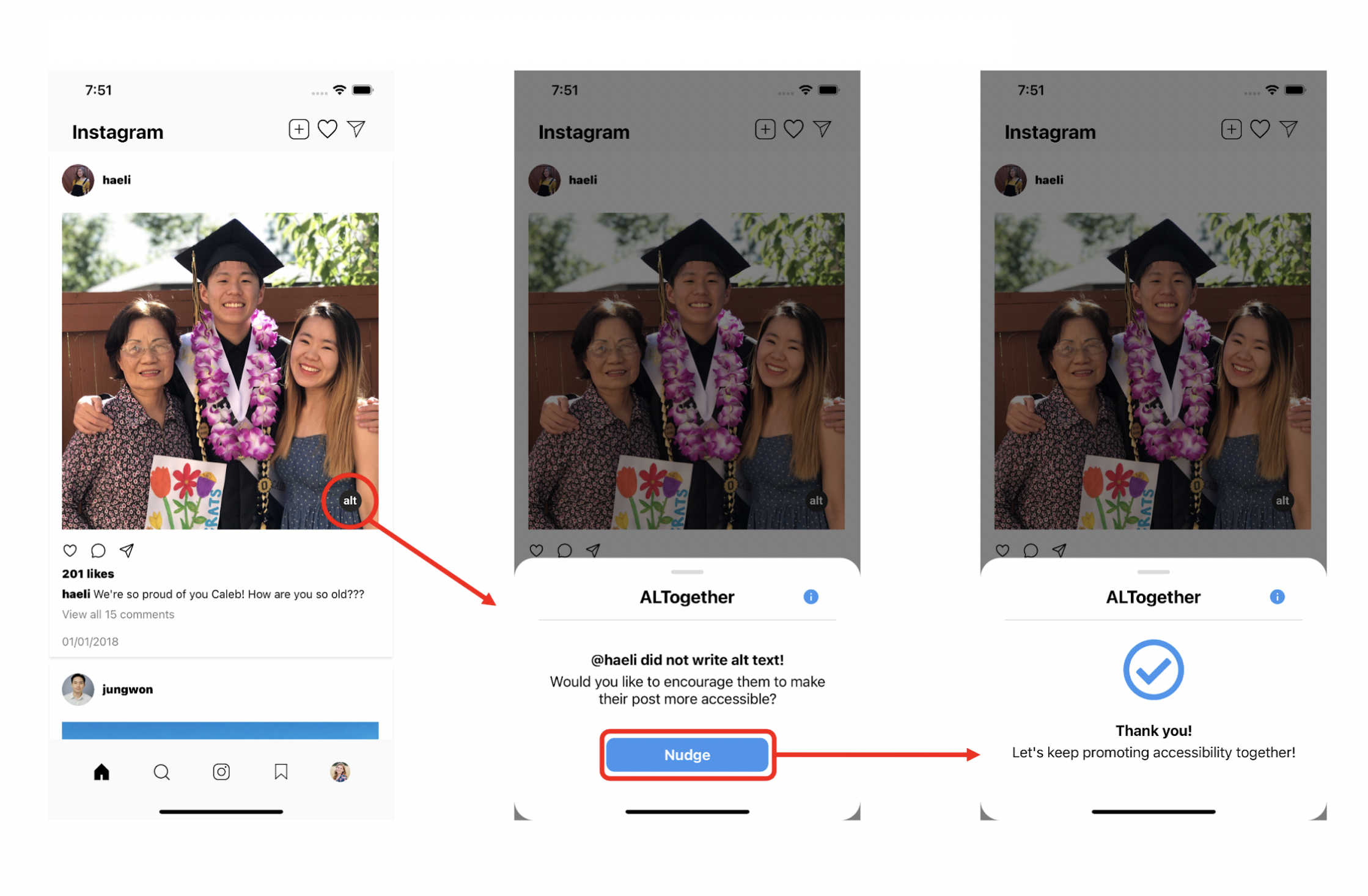

ALTogether integrates alt text writing into the regular flow of posting a photo and makes alt text (or the lack of it) visible on every post. ALTogether does more than remind sighted users to include alt text. It also guides them through writing it by providing an auto-generated suggestion that they can easily modify and personalize before posting. This helps ensure that alt text written with ALTogether follows best practices, without overwhelming users with the task of writing it from scratch.

Ultimately, ALTogether seeks to raise awareness of the accessibility needs of blind and visually impaired users, encouraging sighted users to adopt alt text practices and to motivate their followers to do the same.

A walkthrough demo of our high-fidelity prototype, coded in React Native.

Tasks

To achieve this, we identified three main tasks for our design and implementation.

Task 1. Sighted users are reminded to write alt text and can find where to write it.

Task 2. Sighted users can modify auto-generated alt text suggestions to add personalized context to their images.

Task 3. Sighted users can identify which photos lack alt text and encourage friends to add their own alt texts.

Recognition

We presented ALTogether at the 2021 Design Thinking for User Experience Design (dt+UX) Expo at Stanford University and received awards for Most Societal Impact, Best Demo, Best Pitch, and Best Concept Video.